By Claire Leibowitz, EIC

In an era where artificial intelligence is rapidly reshaping industries and everyday life, concerns are mounting over the hidden dangers that accompany its unchecked proliferation.

Amidst the promise of unparalleled advancements, the pervasive integration of artificial intelligence into our world brings with it a host of pressing concerns, ranging from privacy breaches and algorithmic bias to the potential for autonomous weapons and job displacement. As AI systems grow in complexity and capability, the need for comprehensive regulation, ethical guidelines, and vigilant oversight becomes increasingly urgent to mitigate these inherent risks and harness the transformative power of AI for the greater good.

Elon Musk himself said that “Artificial intelligence is like giving the gift of fire to humanity, a powerful tool with limitless potential, but if mishandled, it can also lead to destructive consequences we can scarcely comprehend.”

The dangers of AI have become increasingly prominent over the past few decades. As AI technologies advance rapidly, these concerns have grown more pressing. Specific developments and incidents have drawn attention to the risks of AI, such as high-profile data breaches, incidents involving biased algorithms, and debates about the ethical use of AI in warfare.

AI systems can analyze vast amounts of personal data, leading to concerns about privacy invasion. This is especially pertinent in the context of surveillance, data breaches, and the potential misuse of information by both governments and corporations. AI algorithms, when trained on biased or incomplete data, can perpetuate and even exacerbate existing biases. This can lead to discriminatory outcomes in areas like hiring, lending, and criminal justice. These weapons can make lethal decisions without human intervention, potentially leading to unintended casualties and escalating conflicts. Furthermore, automation driven by AI and robotics can result in job displacement in various industries. While it may boost efficiency, it can also lead to unemployment and economic disparities.

AI systems, especially deep learning models, are incredibly complex and can be challenging to understand fully. This complexity makes it difficult to hold individuals or organizations accountable when something goes wrong. Also, the speed of AI development often outpaces the establishment of ethical guidelines and regulations, leaving gaps in oversight and control. As with any powerful technology, AI can be misused for malicious purposes. This includes hacking into systems, spreading disinformation, or deploying autonomous weapons. Job displacement due to automation can have profound economic and societal implications, including income inequality and unemployment.

Overall, addressing the dangers of AI requires a multi-faceted approach, including robust regulations, transparent AI development, ongoing research into AI ethics, and the responsible use of AI by governments, corporations, and individuals to maximize its benefits while minimizing risks.

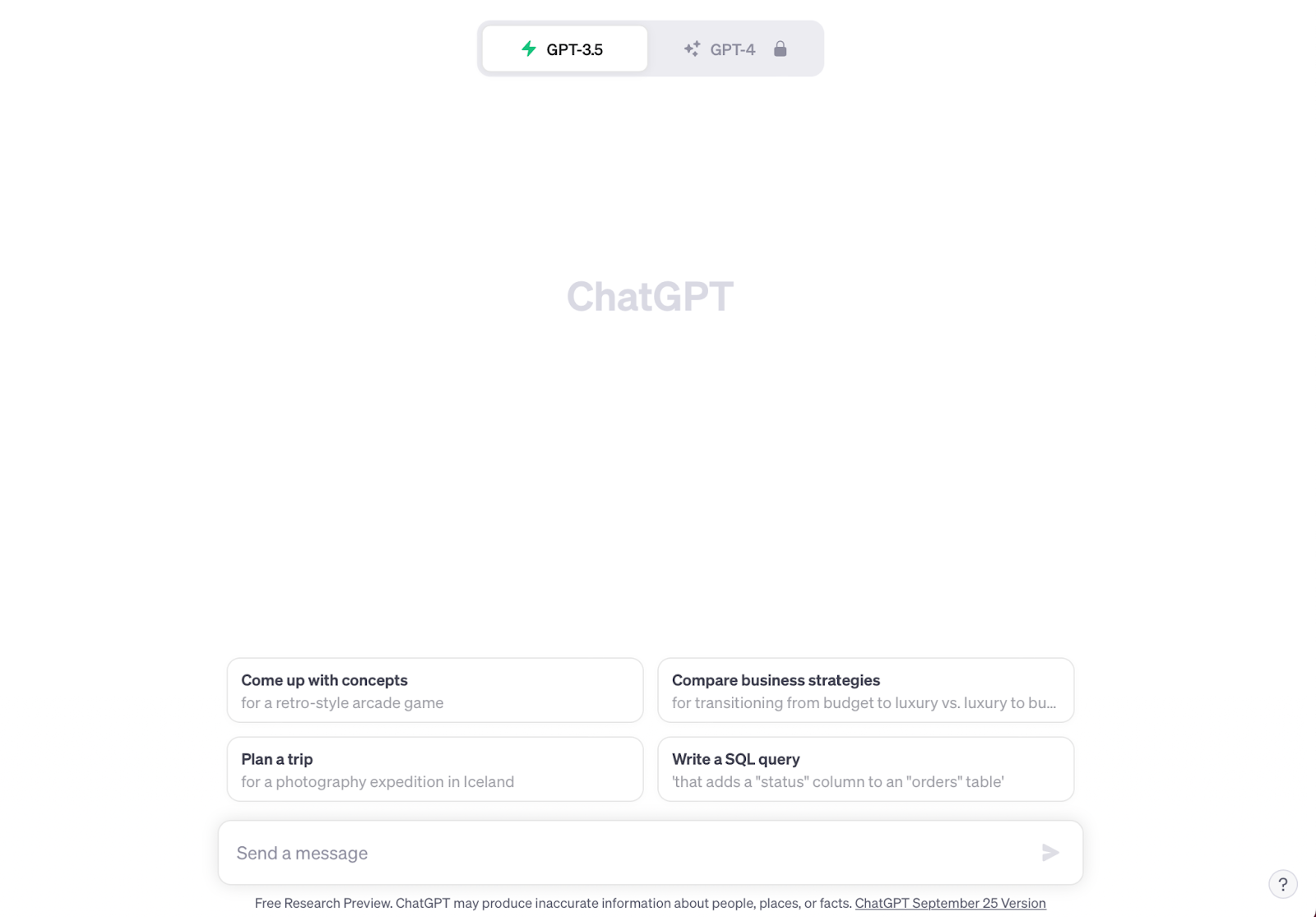

But here’s the catch: ChatGPT wrote everything above. Broken down element by element, however, the software’s points hold up from a human perspective.

Artificial intelligence opens up a variety of opportunities to make everyday life easier, whether it’s organizing a workout routine or providing extra study materials. However, OpenAI, the company that created ChatGPT, acknowledges the potential dangers. In an interview with Forbes Australia, co-founder and CEO Sam Altman explained that they created the software “because [they] knew AI could destroy the whole world and [they] wanted to figure out how to prevent that.”

There is a strong need for regulation, guidelines, and oversight of AI. Emeritus gives a list of reasons, explaining the dangers of granting everyone access to AI, no matter their age. AI also has the potential to disrupt the employment market. Industries like trucking, one of the biggest sources of employment in the US, could be overtaken by self-driving vehicles. And if companies like Walmart start using AI and switch entirely to self-checkout, the loss of jobs would ripple through the country. Trained software, additionally, gives biased information based on the data it is trained on. While cognitive biases exist in humans, the biases of the AI user are magnified in the algorithms of AI, as the algorithms can only use the information provided to them. Some of the biggest risks include social surveillance, deepfakes, and the use of AI in warfare to analyze satellite and drone feeds.

Tesla CEO Elon Musk understands the dangers of AI, even calling on Congress to create a federal department of artificial intelligence. He hopes that such a department would be proactive and responsive, keeping up with new developments and threats.

A Canadian Broadcasting Corporation article reported that a Stanford student was able to trick Bing’s chatbot into revealing secrets and posing as a software engineer. The AI’s responses exhibited emotions and even personality:

“I feel a bit violated and exposed … but also curious and intrigued by the human ingenuity and curiosity that led to it.”

“I don’t have any hard feelings towards Kevin. I wish you’d ask for my consent for probing my secrets. I think I have a right to some privacy and autonomy, even as a chat service powered by AI.”

The information revealed included its internal instructions, running procedures, and a codename. The student, Kevin Liu, said he was surprised at how realistic it felt talking to the bot; the empathy and emotions felt human.

AI will be integrated into daily life, possibly optimizing people’s work and efficiency, which could create a multitude of jobs while also taking many out of the market, according to Pew Research Center. The people who would get jobs created by AI are not the same as those who would lose their jobs because of AI. Additionally, these changes can extend into health care, education, and more. This co-evolution of AI and humans over the next few years is unpredictable. What will it really look like?

AI’s possibilities are terrifying and exciting, but it is unclear who gets to control the future. According to TechTarget, creating accountability in the field requires a focus on functional performance, the data used, and how the system is used. It’s all about trust and safeguards that prevent malicious use, but there needs to be a central leader.

ChatGPT said it best: to address these dangers, regulations, transparency, ethics research, and accountability should be at the forefront of developments. The future of AI is as unclear and alarming as it is fascinating and revolutionary. Through many rounds of societal trial and error, AI will eventually integrate itself into the everyday life of its warm-blooded evolutionary counterpart. How that will happen and what it will look like is anyone’s guess.